While HoloLens 2 is undoubtedly the star of Microsoft’s augmented-reality (AR) offerings, the company isn’t putting all its eggs in that particular basket. Alongside the new HoloLens headset, the company also announced the Azure Kinect Developer Kit: a new version of the Kinect sensor technology.

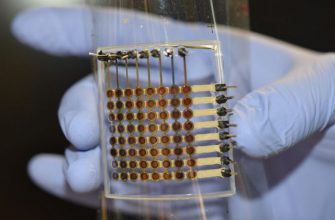

Though Kinect first shipped as a gaming accessory, it immediately piqued the interest of researchers and engineers who were attracted to its affordable depth sensing and skeleton tracking. While Kinect is no longer a going concern for the Xbox, the same technology is what gives HoloLens its view of the world, and it is now available as a device purpose-built for developing scientific and industrial uses for the technology. The Azure Kinect Developer Kit (DK) bumps up the specs substantially compared to the old Xbox accessory. It includes a 12MP RGB camera, a 1MP depth camera, and a 360-degree microphone array made up of seven microphones. It also includes an accelerometer and gyroscope. Early customers are using it for applications like detecting when hospital patients fall over, automating the unloading of pallets in warehouses, and building software that compares CAD models to the physical parts built from those models.

The original Kinect used only local compute power. Indeed, one of the many criticisms made of it was that the Xbox devoted a certain amount of processing power to handling Kinect data. The new hardware still depends on local computation, with things like skeleton tracking handled in software on the PC the hardware is connected to. But the Azure name is not just there for fun. Microsoft views the Kinect as a natural counterpart to many Azure Cognitive Services: the Kinect captures data that’s then used to train machine-learning systems, feed image- and text-recognition services, perform speech recognition, and so on.

To the cloud!

Microsoft is also building new services explicitly for mixed-reality applications. Azure Spatial Anchors is a service that allows multiple AR platforms (including ARKit on iOS, ARCore on Android, Unity, and of course HoloLens and the Universal Windows Platform) to share maps and waypoints between devices. Many people can then move around in and interact with a consistent, shared 3D model of the world. Someone on an iPhone could annotate part of a building, for example by highlighting a defect that needs repair, laying down the fixtures they want to install, or pinning up a picture they like. Other people would then see that annotation in exactly the place it’s meant to be.

Azure Remote Rendering is a service that provides, as the name might imply, remote 3D rendering. All end-user hardware includes 3D graphics acceleration, but there are limits to the volume and complexity of the models it can handle. Complex CAD models used in engineering and architecture need more GPU power. Azure Remote Rendering is Microsoft’s solution. Instead of rendering simplified models on the device, you can render full-fidelity models in the cloud and have the result streamed to the device in real time.

Details on the service are currently scarce. It has been announced and not much more than that, but we wonder if this might be a second area where gaming technology has made its way into the enterprise. The forthcoming Xcloud game-streaming service needs low-latency, remote-rendered 3D graphics, which is precisely the same problem Remote Rendering faces. It wouldn’t be too surprising to discover that Remote Rendering and Xcloud share a common heritage.